Assembly Sequence Planning

Disassembly Tree Search

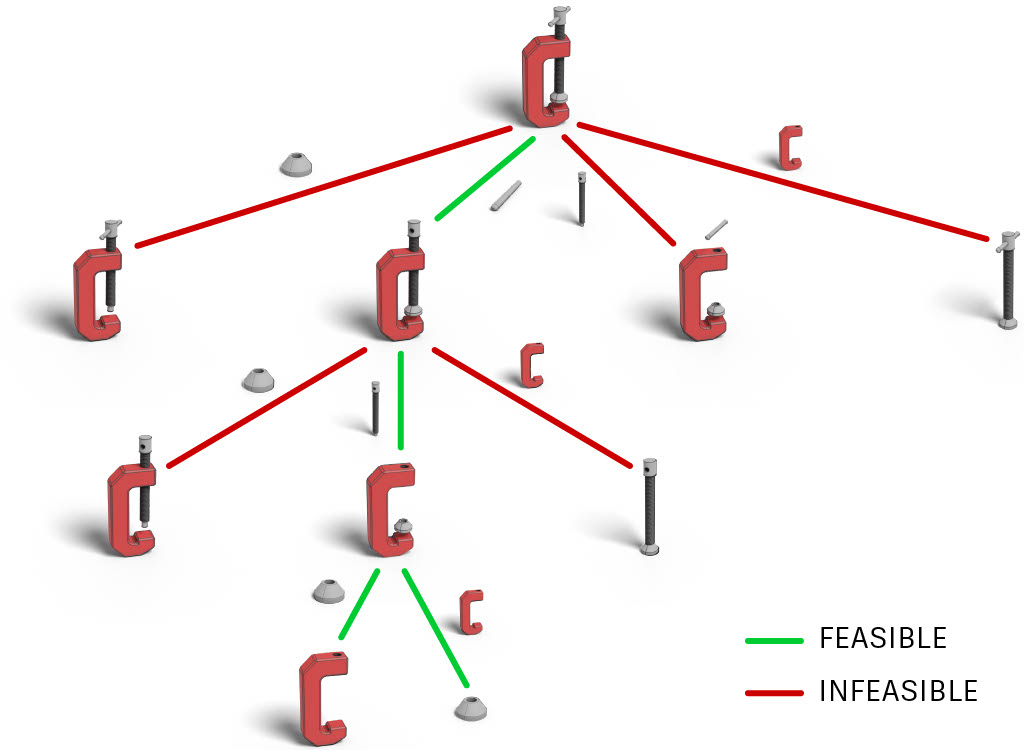

We apply the idea of assembly-by-disassembly: the assembly sequence is obtained by reversing a disassembly sequence, which is much less complex to plan.

We formulate disassembly sequence planning as a tree search, so that established search techniques can be applied to find feasible disassembly sequences within a constrained evaluation budget.

A tree expansion is feasible only if it satisfies the following constraints:

- Path constraint: A penetration-free path must exist to disassemble the part.

- Stability constraint: The remaining subassembly must be stable after the part is disassembled. Otherwise, the unstable parts must be held by grippers or a supporting surface.

- Execution constraint: The assembly process must be executable given the available robotic manipulators.
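The search and the three constraints above can be sketched as a budgeted depth-first search. This is a minimal illustration, not the planner itself: `can_remove` is a hypothetical predicate standing in for the combined path, stability, and execution checks, and the stacked-parts example (`ABOVE`, `can_remove_stack`) is invented for demonstration.

```python
from typing import Callable, Dict, FrozenSet, Iterable, List, Optional, Set

# Hypothetical feasibility predicate: (remaining parts, candidate part) -> bool.
# In a real planner it would bundle the path, stability, and execution checks.
Feasible = Callable[[FrozenSet[str], str], bool]


def plan_disassembly(parts: Iterable[str], can_remove: Feasible,
                     budget: int) -> Optional[List[str]]:
    """Depth-first search for a disassembly order using at most `budget` checks."""
    evaluations = 0

    def search(remaining: FrozenSet[str], removed: List[str]) -> Optional[List[str]]:
        nonlocal evaluations
        if len(remaining) <= 1:
            # The last part needs no removal check.
            return removed + sorted(remaining)
        for part in sorted(remaining):
            if evaluations >= budget:
                return None  # evaluation budget exhausted
            evaluations += 1
            if can_remove(remaining, part):
                result = search(remaining - {part}, removed + [part])
                if result is not None:
                    return result
        return None

    return search(frozenset(parts), [])


def plan_assembly(parts: Iterable[str], can_remove: Feasible,
                  budget: int) -> Optional[List[str]]:
    """Assembly-by-disassembly: reverse a feasible disassembly order."""
    sequence = plan_disassembly(parts, can_remove, budget)
    return None if sequence is None else sequence[::-1]


# Toy example (invented): a vertical stack where a part can be removed
# only once nothing that sits above it remains.
ABOVE: Dict[str, Set[str]] = {
    "base": {"middle", "top"},
    "middle": {"top"},
    "top": set(),
}


def can_remove_stack(remaining: FrozenSet[str], part: str) -> bool:
    return not (ABOVE[part] & remaining)


assembly_order = plan_assembly(ABOVE, can_remove_stack, budget=10)
```

In this toy stack the search removes `top`, then `middle`, then `base`, so the reversed order builds the stack from the base up. With too small a budget the function returns `None` rather than a partial sequence.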

Part Selection

Given a subassembly during the tree search, we must decide which part to disassemble next. To make the search efficient, we devise the following selection strategies:

- Geometric heuristic: We observe that parts on the outside of an assembly are usually easier to remove because they have fewer precedence constraints, so we prioritize selecting parts from the outside in. It is also possible to prioritize parts with small volume or few adjacent parts.

- Learning-based: We implement a graph neural network that takes as input the assembly graph and outputs a probability distribution over all parts, indicating the likelihood of each part being next in the disassembly sequence. The model is trained on thousands of assemblies labeled automatically using physics-based simulation.
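As a rough illustration of the geometric heuristic, the sketch below orders parts from the outside in. It assumes that "outside" can be approximated by the distance between a part's centroid and the centroid of all parts; that proxy, the function name `outside_in_order`, and the example coordinates are assumptions made for this example, not details taken from the method.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]


def outside_in_order(centroids: Dict[str, Point]) -> List[str]:
    """Rank parts by decreasing distance from the mean of the part centroids.

    Assumption: distance from the assembly center is a proxy for how far
    "outside" a part is. Ties are broken by part name for determinism.
    """
    if not centroids:
        return []
    n = len(centroids)
    center = tuple(sum(c[i] for c in centroids.values()) / n for i in range(3))
    return sorted(
        centroids,
        key=lambda name: (-math.dist(centroids[name], center), name),
    )


# Invented example: two outer shells, a bracket, and a core near the center.
example_centroids: Dict[str, Point] = {
    "core": (0.0, 0.0, 0.0),
    "shell_left": (-2.0, 0.0, 0.0),
    "shell_right": (2.0, 0.0, 0.0),
    "bracket": (0.0, 1.0, 0.0),
}
candidate_order = outside_in_order(example_centroids)
```

The resulting list is only a priority order for tree expansion: a part near the front of the list still has to pass the feasibility checks before it is removed.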

Pose Selection

We search for the most stable pose when the assembly is placed on a support surface, with guidance from a quasistatic pose estimator.

We additionally propose a pose reuse technique that reduces the number of reorientations in the planned sequences, which maintains visual consistency and improves the success rate.
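One way to picture pose reuse is the sketch below: at each step, keep the previous pose if it is still stable enough, and otherwise switch to the most stable candidate. The per-pose stability scores, the `threshold` parameter, and the pose names are hypothetical stand-ins for whatever the pose estimator provides; the code assumes every step has at least one candidate pose.

```python
from typing import Dict, List, Optional


def select_poses(stability_per_step: List[Dict[str, float]],
                 threshold: float) -> List[str]:
    """Pick one pose per step, reusing the previous pose when it is stable enough.

    `stability_per_step[i]` maps each candidate pose at step i to a
    hypothetical stability score (higher is more stable).
    """
    poses: List[str] = []
    previous: Optional[str] = None
    for scores in stability_per_step:
        if previous is not None and scores.get(previous, float("-inf")) >= threshold:
            pose = previous  # reuse: no reorientation needed
        else:
            # Most stable candidate; sorting first makes ties deterministic.
            pose = max(sorted(scores), key=scores.__getitem__)
        poses.append(pose)
        previous = pose
    return poses


def count_reorientations(poses: List[str]) -> int:
    """Number of consecutive steps whose poses differ."""
    return sum(1 for a, b in zip(poses, poses[1:]) if a != b)


# Invented scores for a three-step sequence.
steps = [
    {"upright": 0.9, "side": 0.7},
    {"upright": 0.8, "side": 0.85},
    {"upright": 0.9, "side": 0.6},
]
with_reuse = select_poses(steps, threshold=0.5)
# An infinite threshold disables reuse and always takes the most stable pose.
without_reuse = select_poses(steps, threshold=float("inf"))
```

In this example, always taking the most stable pose flips the assembly twice, while reuse keeps it upright throughout at a small cost in stability at the second step.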

Gravitational Stability Check

To verify the physical feasibility of the assembly sequence, we developed an efficient gravitational stability check algorithm for multi-part, contact-rich assemblies. Given an assembly in a specific pose, the algorithm outputs the set of parts that need to be held to make the assembly stable.

Our stability check algorithm iteratively determines which unstable parts to hold until the assembly becomes stable. This avoids the combinatorial complexity, yields an order-of-magnitude speedup, and retains reasonable accuracy.
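The iterative idea can be sketched as a simple loop, shown below under stated assumptions. `find_unstable` is a hypothetical callback that would wrap a physics simulation and report which parts move under gravity for a given set of held parts. Holding one additional part per iteration, chosen by name, is a choice made for this sketch only. The toy support model (`GROUNDED`, `RESTS_ON`) is invented to make the example runnable.

```python
from typing import Callable, Dict, FrozenSet, Optional, Set


def parts_to_hold(find_unstable: Callable[[FrozenSet[str]], Set[str]]) -> Set[str]:
    """Grow the held set one part at a time until nothing is unstable.

    Each iteration adds a part that was not held before, so the loop runs
    at most (number of parts + 1) times instead of testing every subset.
    """
    held: Set[str] = set()
    while True:
        unstable = set(find_unstable(frozenset(held))) - held
        if not unstable:
            return held
        held.add(min(unstable))  # arbitrary but deterministic choice


# Toy stand-in for a simulator: a part is stable if it is grounded, held,
# or rests on a stable part.
GROUNDED: Set[str] = {"base"}
RESTS_ON: Dict[str, Optional[str]] = {
    "base": None,
    "lid": "base",
    "bracket": None,   # floats freely unless held
    "shelf": "bracket",
}


def toy_find_unstable(held: FrozenSet[str]) -> Set[str]:
    stable = set(GROUNDED) | set(held)
    changed = True
    while changed:
        changed = False
        for part, support in RESTS_ON.items():
            if part not in stable and support in stable:
                stable.add(part)
                changed = True
    return set(RESTS_ON) - stable


held_parts = parts_to_hold(toy_find_unstable)
```

Here both `bracket` and `shelf` are initially unstable, but holding `bracket` alone stabilizes `shelf` as well, so re-checking after each added part can keep the held set small.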